DrEureka Rewards, DR parameters, and Policies

We evaluate DrEureka on 3 tasks, quadruped globe walking, quadruped locomotion, and dexterous cube rotation. In this demo, we visualize the unmodified best DrEureka reward and DR parameters for each task and visualize the policy deployed in the training simulation environment as well as the real-world environment.

![<b>Walking Globe</b>, best DrEureka reward and DR parameters:

[sep]

assets/reward_functions/walking_globe.txt [sep] assets/domain_randomizations/walking_globe.txt](videos/tasks/walking_globe.png)

![<b>Cube Rotation</b>, best DrEureka reward and DR parameters:

[sep]

assets/reward_functions/cube_rotation.txt [sep] assets/domain_randomizations/cube_rotation.txt](videos/tasks/cube_rotation.png)

![<b>Forward Locomotion</b>, best DrEureka reward and DR parameters:

[sep]

assets/reward_functions/forward_locomotion.txt [sep] assets/domain_randomizations/forward_locomotion.txt](videos/tasks/forward_locomotion.png)

Simulation

Real

DrEureka responses shown within code block.Qualitative Comparisons

We have conducted systematic study on the benchmark quadrupedal locomotion task. Here, we present several qualitative results. See the full paper for details.

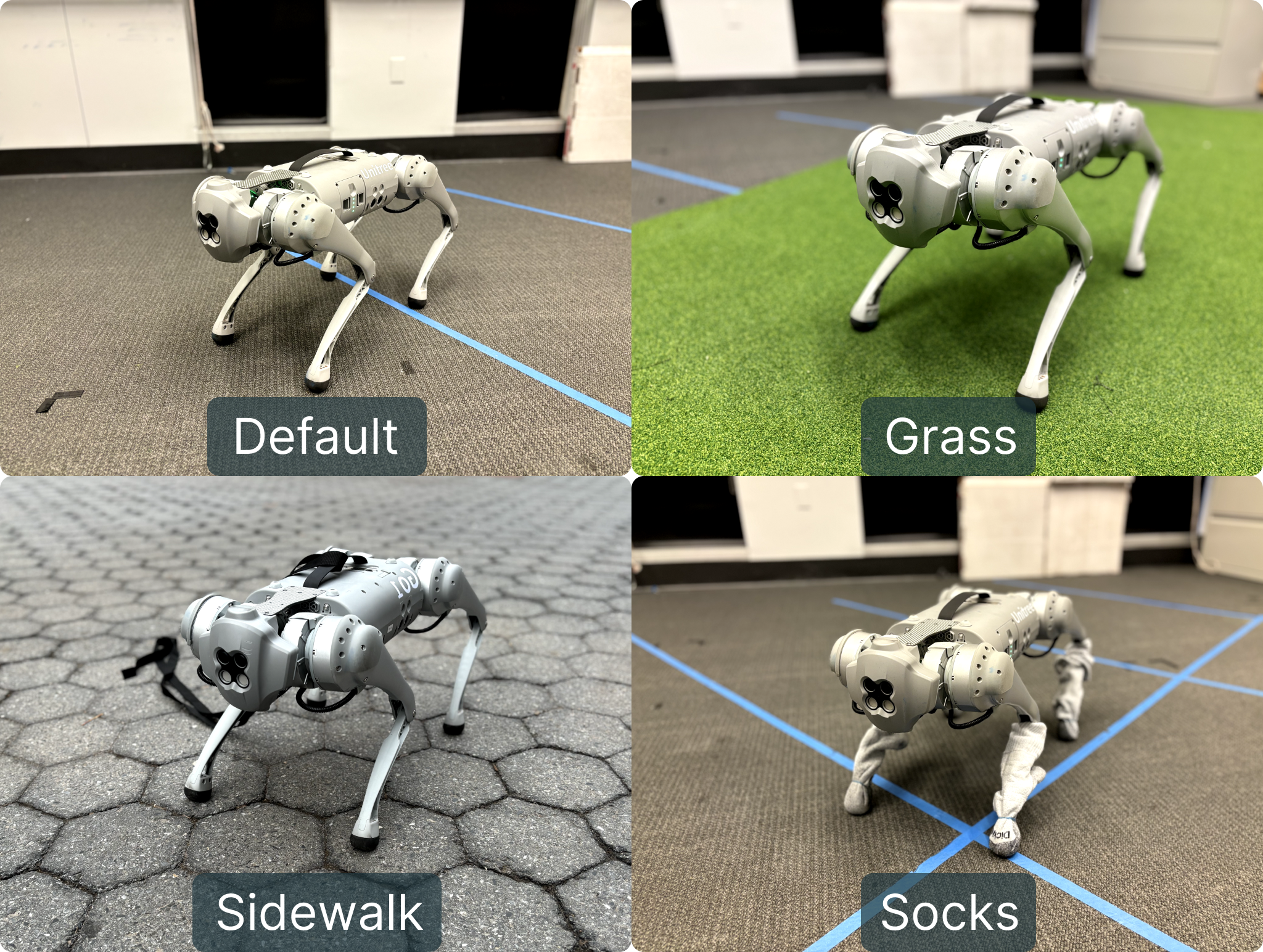

Terrain Robustness. On the quadrupedal locomotion task, we also systematically evaluate DrEureka policies on several real-world terrains and find they remain robust and outperform policies trained using human-designed reward and DR configurations.

The default as well as additional real-world environments to test DrEureka's robustness for quadrupedal locomotion.

DrEureka performs consistently across different terrains and maintains advantages over Human-Designed.

DrEureka performs consistently across different terrains and maintains advantages over Human-Designed.

DrEureka Safety Instruction. DrEureka's LLM reward design subroutine improves upon Eureka by incorporating safety instructions. We find this to be critical for generating reward functions safe enough to be deployed in the real world.

DrEureka Reward-Aware Physics Prior. Through extensive ablation studies, we find that using the initial Eureka policy to generate a reward-aware physics prior is crucial for the success of DrEureka. and then using LLM to sample DR parameters are critical for obtaining the best real-world performance.